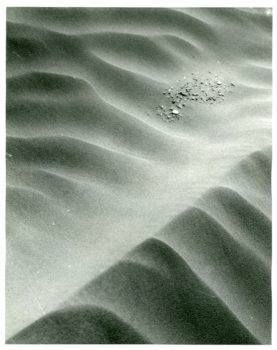

My 52 Week Photo Challenge

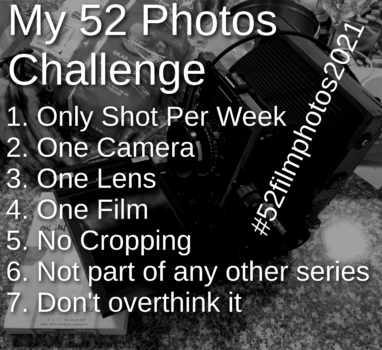

Happy New Year! We made it! On the first morning of the year, I woke up with an idea: I wanted to do a 52 week photo challenge. It's not a new idea by any means, but nonetheless, here are my “rules”:

1. Only One Shot Per Week (One Exposure)
2. One Camera …